DeepSeek's surprisingly inexpensive AI model challenges industry norms. DeepSeek cites a training cost of just $6 million for its DeepSeek V3 model, but a closer look reveals a far more substantial investment.

Image: ensigame.com

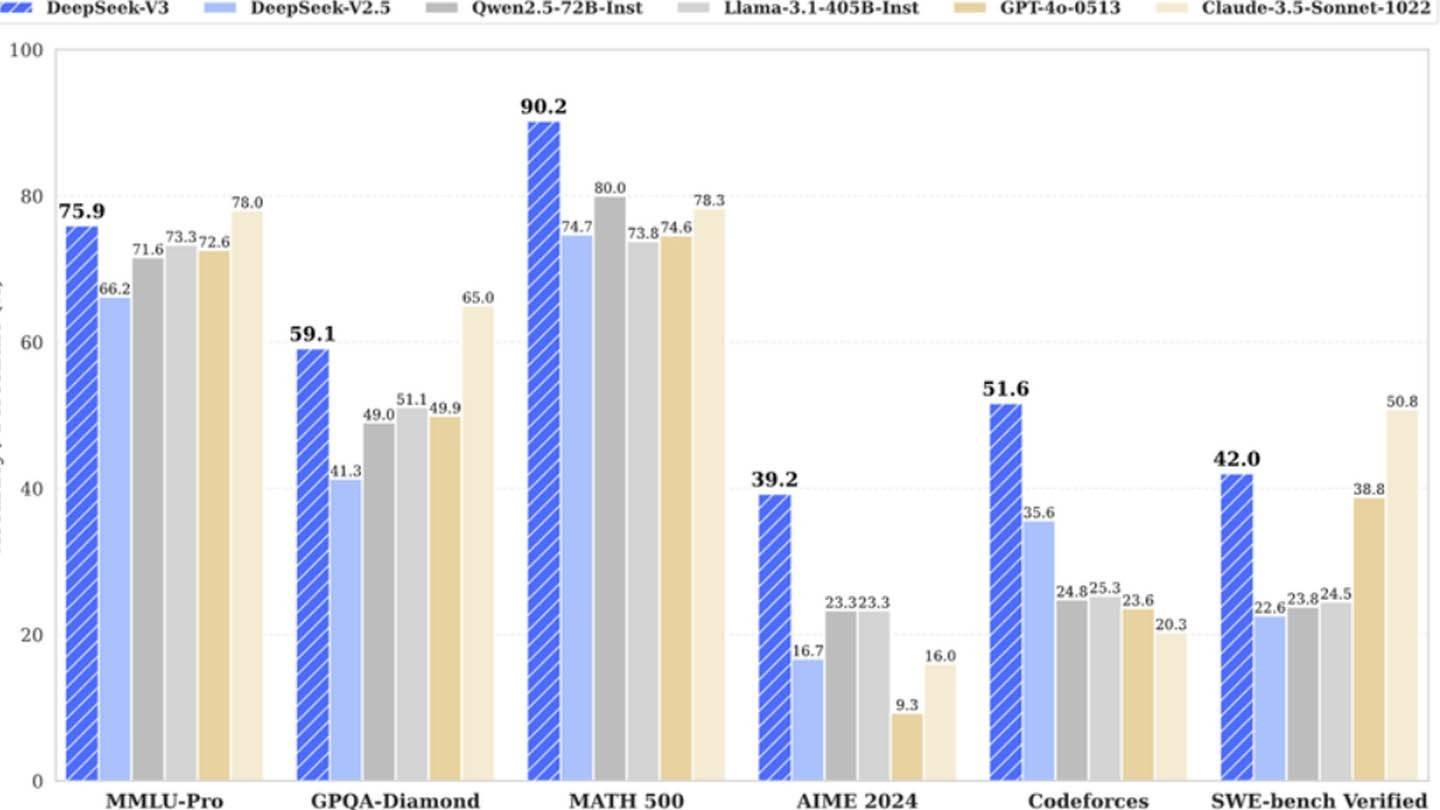

DeepSeek V3 relies on several innovative technologies: Multi-token Prediction (MTP) for enhanced accuracy and efficiency, Mixture of Experts (MoE), which uses 256 neural networks to accelerate training, and Multi-head Latent Attention (MLA) for improved information extraction. These advancements contribute to its competitive performance.
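A rough sketch of why a Mixture of Experts design can speed things up: a gating step scores all 256 expert networks, but only a handful actually run for any given token. The top-8 selection, the softmax gate, and the random scores below are illustrative assumptions, not details taken from this article or a description of DeepSeek's actual router.

```python
import math
import random

NUM_EXPERTS = 256  # expert count cited in the article
TOP_K = 8          # assumption for illustration: experts activated per token


def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(gate_scores, top_k=TOP_K):
    """Pick the top_k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


# Hypothetical gate scores for a single token (random stand-ins).
random.seed(0)
scores = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = route(scores)
active_fraction = TOP_K / NUM_EXPERTS

print(f"experts run for this token: {len(selected)} of {NUM_EXPERTS}")
print(f"share of experts active: {active_fraction:.1%}")
```

Under these assumptions only about 3% of the experts do any work per token, which is the general idea behind MoE's compute savings.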

However, SemiAnalysis reports a massive computational infrastructure behind the model: approximately 50,000 Nvidia Hopper GPUs, including 10,000 H800 units, 10,000 H100 units, and additional H20 units, spread across multiple data centers. This infrastructure, valued at roughly $1.6 billion with $944 million in operational expenses, is hard to reconcile with the $6 million training cost claim.

DeepSeek, a subsidiary of the Chinese hedge fund High-Flyer, owns its data centers, which gives it direct control over its infrastructure and lets it innovate faster. Being self-funded makes it more agile. High salaries, exceeding $1.3 million annually for some researchers, attract top Chinese talent.

The $6 million figure represents only pre-training GPU costs; it omits research, refinement, data processing, and infrastructure. DeepSeek's total AI investment surpasses $500 million. While its lean structure fosters efficiency, the "revolutionary budget" narrative is misleading. DeepSeek's success stems from substantial investment, technological breakthroughs, and a skilled team, not solely from a low budget. Even so, its costs remain significantly lower than its competitors': DeepSeek's R1 model cost $5 million, for example, compared with $100 million for ChatGPT-4o.
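To put the gap in perspective, the figures quoted in this article can be compared directly. The snippet below is simple arithmetic on those reported numbers; the variable names are made up for illustration, and the $500 million total is treated as a lower bound because the article says only that investment "surpasses" it.

```python
# Figures as reported in the article, in US dollars.
claimed_pretraining_cost = 6_000_000
hardware_value = 1_600_000_000
operating_expenses = 944_000_000
total_investment_floor = 500_000_000  # "surpasses $500 million", so a lower bound


def multiple_of_claim(amount):
    """How many times larger an amount is than the claimed $6 million."""
    return amount / claimed_pretraining_cost


hardware_ratio = multiple_of_claim(hardware_value)
opex_ratio = multiple_of_claim(operating_expenses)
investment_ratio = multiple_of_claim(total_investment_floor)

print(f"hardware value:      about {hardware_ratio:.0f}x the claimed cost")
print(f"operating expenses:  about {opex_ratio:.0f}x the claimed cost")
print(f"total AI investment: at least {investment_ratio:.0f}x the claimed cost")
```

On these numbers the hardware alone is worth a few hundred times the headline training cost, which is why the $6 million figure is better read as one line item than as a total.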